View all your Storage consumed in Microsoft Fabric – Lakehouse Files, Tables and Warehouses

One of the things I have found when working with my customers in Microsoft Fabric is that there is currently no way to easily view the total storage for the entire tenant.

Not only that, but it would also be time consuming and quite a challenge to then find out what is consuming the storage. Could it be large files or tables or warehouse tables?

In this blog post I will show you how using a Notebook you can get details of the storage across your Microsoft Fabric Tenant.

This is what it will look like after getting the data and then creating the semantic model.

I will give an overview of the important cells in the notebook. Where I will explain what I am doing.

At the end of this blog post I will have a link where you can get a copy of the notebook to try in your own Microsoft Fabric tenant.

NOTE: I do have the role of a Power BI / Fabric Admin which allows me to be able to get the information required.

As per the documentation below the caller must be either a Fabric Administrator or a Service Principal with the delegates scopes.

For getting all the Lakehouse and Warehouse Items the scope is “Tenant.Read.All or Tenant.ReadWrite.All”

Items – List Items – REST API (Admin) | Microsoft Learn

Getting a list of all the Lakehouse’s and Warehouses

I first must give a big thanks to Sandeep Pawar from his blog https://fabric.guru/programmatically-creating-managing-lakehouses-in-fabric which allowed me to easily get a list of all the items for the Lakehouse’s and Warehouses.

Line 15 is where I am specifying to only get the Lakehouse’s in my tenant.

In Line 16 and 17 is where I am authenticating using my own credentials.

The code is then going through and getting a list of all the Lakehouse’s in my tenant as shown below.

In the next code cell below I am then removing all of the Dataflows Staging Lakehouse’s

As I have shown in the past I did this using the Data Wrangler feature, which makes it very easy for me to filter.

Next, I did this for the Warehouses, where you can see in line 16 I specified “Warehouse”

I once again cleaned up any Warehouses that might have the DataflowsStaging (I do not think they are used in Warehouses but better safe than sorry I say)

Next, is where I am using the Service Principal that I created in my previous blog post (Configuring the Service Principal access Microsoft Fabric OneLake APIs – FourMoo | Fabric | Power BI) which has got the required permissions to access the Azure Storage APIs which will allow me to query the OneLake files.

The first part is where I am using the Azure Key Vault to access the Service Principal credentials.

The next part in the same code cell below is where I am testing the authentication to make sure that it is working successfully.

In line 46 I am putting in my WorkspaceID and LakehouseID to test the authentication.

As you can see the highlight below, I can see that the “Connection with OneLake is successful”

Now, finally this is the section where I now am going to iterate through all of the Lakehouses that I have and get a list of the files and file sizes in the code cell example below.

As shown in line 31, is where I am passing through the Workspace ID and Lakehouse ID to the OneLake API.

In lines 46 – 48, I am adding the Lakehouse ID, Workspace ID and Lakehouse Name to the dataframe.

In lines 49 – 51, I am changing the data types.

In lines 52 – 55, I am adding the current date, and then getting the Year, Month and Day to be used to partition the table.

Line 58 is where I am writing the table and partitioning when it is loaded.

The two next code cells are almost identical except for the API to get the Lakehouse or Warehouse Table details as shown below in line 32

NOTE: While the API URL looks identical in the data being loaded, they are different because it is being loaded from the df_Lakehouses_clean and df_Warehouses_clean

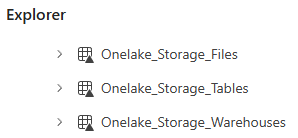

Once the notebook has completed, I can then see the data in my Lakehouse Tables.

UPDATE: 2024-10-31

Thanks to a comment from Marco, I realised that I had not updated a table in my subsequent blog post which queries the tables. Long story short, is I was missing the table called “WorkspaceDetails”.

I have now updated the notebook code below, which will run and get all the workspace details across your tenant using the Service Principal Credentials which has got Admin API access.

As in previous steps you will need update it to use your Service Principal Credentials as shown in lines 10 – 16

In the steps below I then query the groups Admin API and save the details to the table called “WorkspaceDetails”

This will then create the table which can then be used in the PBIT in my next blog post.

SUMMARY

In this blog post I have shown you how to get all the details of your storage within your Fabric Tenant.

Here is a link to the Notebook where you can download it and then modify the Azure key Vault or put in the credentials to successfully load the data.

Fabric/Blog – Get Files and Table Sizes.ipynb at main · GilbertQue/Fabric

Thanks for reading and any questions or comments are most welcome.

In the next blog post I will show you how I created the Power BI report, which allowed me to easily understand what is consuming my storage.

[…] Gilbert Quevauvilliers builds a report: […]

[…] If you want to know how I got this data, please read my previous blog post View all your Storage consumed in Microsoft Fabric – Lakehouse Files, Tables and Warehouses &#… […]