Part 2 – Storing the Log Analytics Queries in Azure Blob Storage (Power BI Query Usage and Performance Series)

Following on in my series, in this blog post I am going to demonstrate how to store Log Analytics Queries in Blob Storage.

This allows me to be able to store the Power BI Queries externally from Log Analytics and to have an easy way to get the data into my Fabric Lake house in later steps. To do this I am going to use a Logic App in Azure.

In this series I am going to show you all the steps I did to have the successful outcome I had with my client.

Part 1 – Enabling Log Analytics, generating the KQL queries.

Part 2 – Storing the Log Analytics Queries in Azure Blob Storage (This blog post)

Part 3 – Using Microsoft Fabric Dataflow Gen2 to store the data in the Lakehouse.

Part 4 – Creating a Power BI dataset and report using DirectLake.

Creating the Logic App in Azure

The first thing I needed to do is to create my logic app. Logic apps is very similar to Power Automate and I find logic apps has got all the functionality and is easy to use with additional Azure functionality.

I went into my Azure portal, I then clicked on Add Resource, and searched for Logic App.

I then clicked on the Create dropdown and selected Logic App.

In the Create Logic App page, I selected my subscription and resource group.

I then gave it a meaningful name (making it easier to identify later) “FourMoo-PBI-Queries”.

Next, I selected my region as to where my log analytics workspace is stored.

I did not enable the log analytics for my logic app because there is some good logging already available in the logic app.

Finally the plan type I chose was “Consumption” based because the running of the query and exporting to Azure Blob storage runs really quickly.

If required I then put in my tags and on the final screen I could review and click on “Create” to create my Logic App.

Once it has been created, I then click on “Go to resource”

Exporting my log analytics query to Azure blob storage

Now that I had created my logic app, I will now create the steps to export my query to Azure Blob storage.

When I went to my logic app, I was presented with the Logic Apps Designer screen asking me what I wanted to do. I clicked on Recurrence because I want to run this once a day at 0:01 UTC.

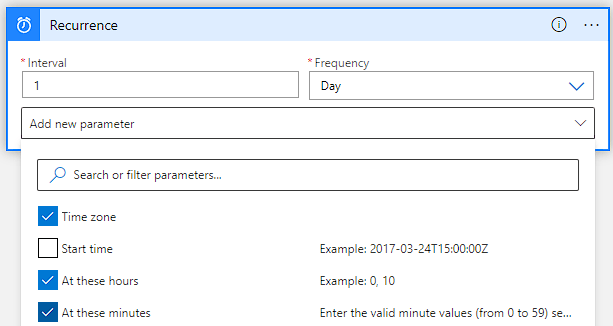

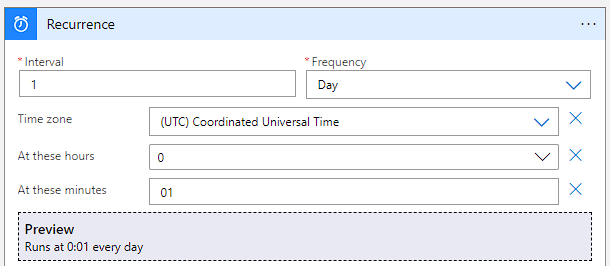

I was then presented with the recurrence step, and I changed the Interval to 1, the Frequency to Day.

I also clicked on the drop down for “Add new parameter” and selected Time zone, At these hours and At these minutes.

Next, I then selected my Time zone, and the hours and minutes as shown below. I can also see under Preview when it will run.

I clicked on New Step and searched for “run log analytics query” and then selected “Run query and list results”. Underneath I could see it says Azure Monitor Logs which means it can run Log Analytics Queries.

Because this is the first time, I am using the Azure Monitor Logs I was then prompted to give it a name and authenticate as shown below, and I clicked on Sign in.

NOTE: You will need to have access to the Log Analytics workspace to be able to run queries.

Once I had created the connection and successfully authenticated, I was then presented with the screen for me to put in all the details required.

I then clicked on the drop-down menus and selected the details needed to connect to my log analytics workspace.

NOTE: If you want to find out the details of your Log Analytics workspace, such as the subscription name, resource group and resource name, you can go back to the log analytics workspace and click on the overview page to view it as highlighted below.

I then used the query I had blogged about in my previous blog post in the Query section.

I then clicked on Save, just to make sure I have saved the work I have already done.

The next 2 steps are where I parse the JSON outputs and compose it into a format that I can then use to save it to Azure Blob Storage.

I searched for parse JSON and selected “Parse JSON” as shown below.

I then put in the body from my previous step.

Now it might be a challenge to create the JSON schema, what I find easiest is what I did was to run the query from Log Analytics and save it as a JSON file. Then what I can do to generate the JSON schema is click on “Use sample playload to generate schema”

I open my JSON file and copy all the contents into the Window as shown below, then click on Done.

It then creates the JSON schema for me once again as shown below.

The next step is to then take the JSON data and compose it so that we can then save it in subsequent steps.

I searched for Compose and selected “Compose”.

In the compose step, where it is looking for the Inputs, I clicked in the Inputs box and when it opened the window I selected “Body” from my previous step called “Parse JSON”.

This is what it looked like after I had added the Body.

The next step is where I am going to save this data to my Azure Blob Storage location (where I will also specify the location and filename).

I searched for “Azure Blob” and selected “Create Blob (V2)”

Now before completing the steps below, I first needed to create a connection, in my example I have created a Managed System Identity in my Logic App and given this Managed System Identity the Role of “Storage Blob Data Contributor” in my Azure Storage Account.

PIC

Next, in my Azure Blob Storage Step, I gave it the name of my Azure Storage Account name and selected the Authentication type as “Logic Apps Managed Identity”

Once I had created the connection I could then put in the details of where I want to save the JSON output from the previous steps.

NOTE: Before completing the steps below make sure you have the following information:

- Storage Account Name

- Folder Path (the container where you want to store the files)

- Blob Name (how you want to name your files)

- Blob Content (This is the actual values from the previous steps)

In my working example it would be the following:

- Storage Account Name: mvpnloganalytics

- Folder Path: pbi-log-analytics

- Blob Name: Dynamically created based on current date

I then put in my Storage Account Name. Make sure that there are no spaces at the end.

Next, I then clicked on the folder icon next to the Folder Path, this also allowed me to make sure that my Managed Identity had the right permissions to view the Azure storage account containers.

I selected pbi-log-analytics

Now for the Blob Name, I wanted this to be dynamic so that it would create the file based on the current date. I did it by clicking into the “Blob Name” and then I clicked on Expression as shown below.

I then put in the following expression which is going to create a file with the folder structure and name.

For example, if today’s date was 2023-08-26 it is going to create the following: /pbi-log-analytics/2023/08/26/2023-08-26.JSON

I had to do this individually because I had to put in the forward slash, each time make sure to click on Expression before entering the expressions below.

formatDateTime(addDays(utcNow(),-1),'yyyy') / formatDateTime(addDays(utcNow(),-1),'MM') / formatDateTime(addDays(utcNow(),-1),'dd') / formatDateTime(addDays(utcNow(),-1),'yyyy-MM-dd') .JSON

Once completed the Blob Name should look like the following below (if it has got the fx then it is the expression)

I then clicked into Blob Content, and from the selection I selected the “Outputs” from the previous “Compose” Step.

This is what the step “Create blob (V2) looked like once completed.

I then clicked on Save to save my logic app.

Finally, I am now at the point where I can test the running of my logic app. At the top I clicked on Run Trigger and clicked on Run.

I could now see that it had run successfully.

But, to make 100% sure I need to check that the file has been created in my Azure Storage account. As shown below I can see the file has been created along with the folder structure.

Summary

In the second part of my blog series, I have shown you how I extracted the data from the log analytics query and then save it to Azure blob storage.

The next part of the series is where I will use the Dataflows Gen2 in Fabric to take the data from the Azure blob storage and store it into a lake house table in Microsoft Fabric.

Thanks for reading any comments or suggestions are most welcome.

[…] Gilbert Quevauvilliers wants some long-term storage: […]